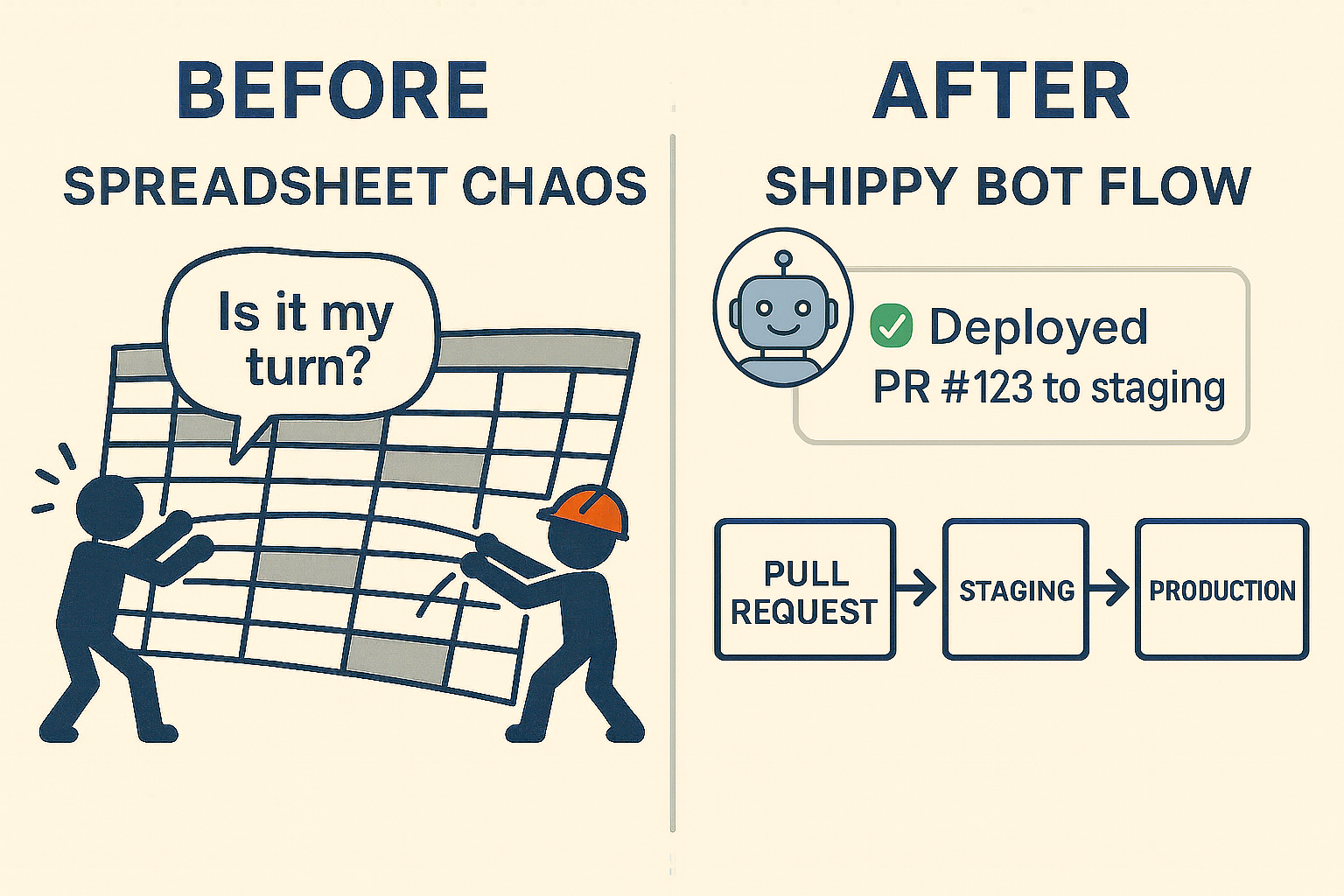

I wrote my first chatbot in anger. Our engineering team had recently shifted from shipping one massive release each week to deploying pull requests individually, releasing multiple changes daily. We managed the deployment queue through a shared spreadsheet, which felt like punishment. Every edit turned into a tug-of-war between teams, often leaving DevOps out of the loop. Even with a dedicated Slack channel, priority changes slipped through the cracks. Frustrated with the situation, I hacked together a Slack bot one afternoon to take over the queue. I named it after a mascot created by a team member, calling it “Shippy” and giving it a silly logo-inspired avatar.

What began as a quick fix to save my sanity reshaped how I think about automation. The first version of the bot was crude, but it proved a point: automation doesn’t have to be perfect to be transformative. Removing a small but painful friction freed the team to focus on shipping instead of squabbling. That lesson stuck with me. If a few lines of code could change how an entire engineering team worked daily, what else could automation unlock at scale?

That small spark of rebellion has since lit my path from simple chatbots to AIOps.

Building Trust in DevOps Automation

“Shippy” began to evolve quickly. After managing the deployment queue, I added features so the bot could check the status of the associated pull request. Eventually, it evolved into a complete ChatOps tool that allowed our developers to promote code from integration to production without DevOps intervention.

It worked. Developers gained autonomy. Releases moved faster. And our DevOps team stopped being the bottleneck, shifting our focus to platform reliability instead of button-pushing.

But the most important lesson I learned wasn’t technical. It was this: automation only works if people trust it.

We designed the bot with (a lot of) guardrails:

- It only allowed the person at the top of the queue to deploy their pull request.

- It would only deploy fully approved pull requests.

- Pull requests were required to deploy to a staging environment successfully before production deployment was allowed.

- Semi-canary releases to one production shard were encouraged (though there was a way to override this guardrail for hotfixes).

- Rollback commands were one line away.

- Most importantly, the bot logged every action transparently in Slack.
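As a rough illustration, the guardrails above could be expressed as a single pre-deploy check. This is a minimal sketch, not Shippy's actual code: `QueueEntry`, `PullRequest`, `check_deploy`, and `audit_line` are hypothetical names, and the sketch assumes the canary step is enforced unless a hotfix override is passed.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueueEntry:
    user: str        # who is waiting to deploy
    pr_number: int   # the pull request they want to ship


@dataclass
class PullRequest:
    number: int
    approved: bool            # every required review is in
    staging_ok: bool          # staging deploy succeeded
    canary_ok: bool = False   # one production shard already runs the change


def check_deploy(pr: PullRequest, requester: str, queue: List[QueueEntry],
                 hotfix: bool = False) -> List[str]:
    """Return guardrail violations; an empty list means the deploy may proceed."""
    problems = []
    head = queue[0] if queue else None
    if head is None or head.user != requester or head.pr_number != pr.number:
        problems.append("requester is not at the top of the queue with this PR")
    if not pr.approved:
        problems.append("PR is not fully approved")
    if not pr.staging_ok:
        problems.append("PR has not deployed to staging successfully")
    # Assumption: the canary step is enforced unless the hotfix override is set.
    if not pr.canary_ok and not hotfix:
        problems.append("no canary release to a production shard yet")
    return problems


def audit_line(pr: PullRequest, requester: str, problems: List[str]) -> str:
    """Human-readable line a bot could post to the channel for every attempt."""
    verdict = "ALLOWED" if not problems else "BLOCKED: " + "; ".join(problems)
    return f"deploy PR #{pr.number} by {requester}: {verdict}"
```

Returning every violation at once, rather than stopping at the first, keeps the bot's refusal readable: the developer sees everything that needs fixing in one message.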

Trust wasn’t automatic. Some engineers worried the bot might deploy the wrong code or miss a critical review. We paired every automated step with visible, human-readable confirmation in Slack to win them over. Transparency was the lever. Once people saw that the bot was consistent and often faster than DevOps handling deployments, they stopped worrying about the “how” and started relying on the outcome.

Those safety nets gave developers, DevOps, and management the confidence to hand over control. What was once a manual bottleneck became an automated, developer-driven workflow. It even had a few Easter eggs and some attitude, just for fun.

Those guardrails worked well when automation meant scripts and bots following our lead. But as systems grew, something else became clear: the commands themselves became the bottleneck.

Scaling ChatOps for Developer Autonomy

Shippy wasn’t just a side project that worked for one squad. It scaled. Within a few months, we rolled it out across our entire engineering department of about 80 to 100 people. Every team used the same core bot. Because the workflows were consistent, adoption felt natural.

There were some variations. A few groups had unique release requirements, some had integration tests that ran longer than others, and one team wanted its own release channel in Slack. We customized Shippy to respect those rules without breaking the shared experience. The balance was critical: one trusted framework, flexible enough for local needs.

That consistency made scaling possible. Instead of a patchwork of scripts or shadow tools, we had a single automation backbone that everyone trusted, and it freed DevOps to stop firefighting and start thinking about platform reliability at a higher level.

We learned that automation can scale if it’s both consistent and adaptable. Teams face the same tension now with AI: consistency for trust and adaptability for local needs.

From ChatOps to AIOps

ChatOps gave us a new language for operations. Instead of digging through wikis and shell scripts, we typed a command in Slack, and a bot did the work. Deployments were faster, incidents were easier to coordinate, and teams could see what was happening in one place.

But ChatOps still relied on humans to drive. Someone who knew what to ask for had to type out every rollback, runbook, and command. Bots handled the execution, not the judgment.

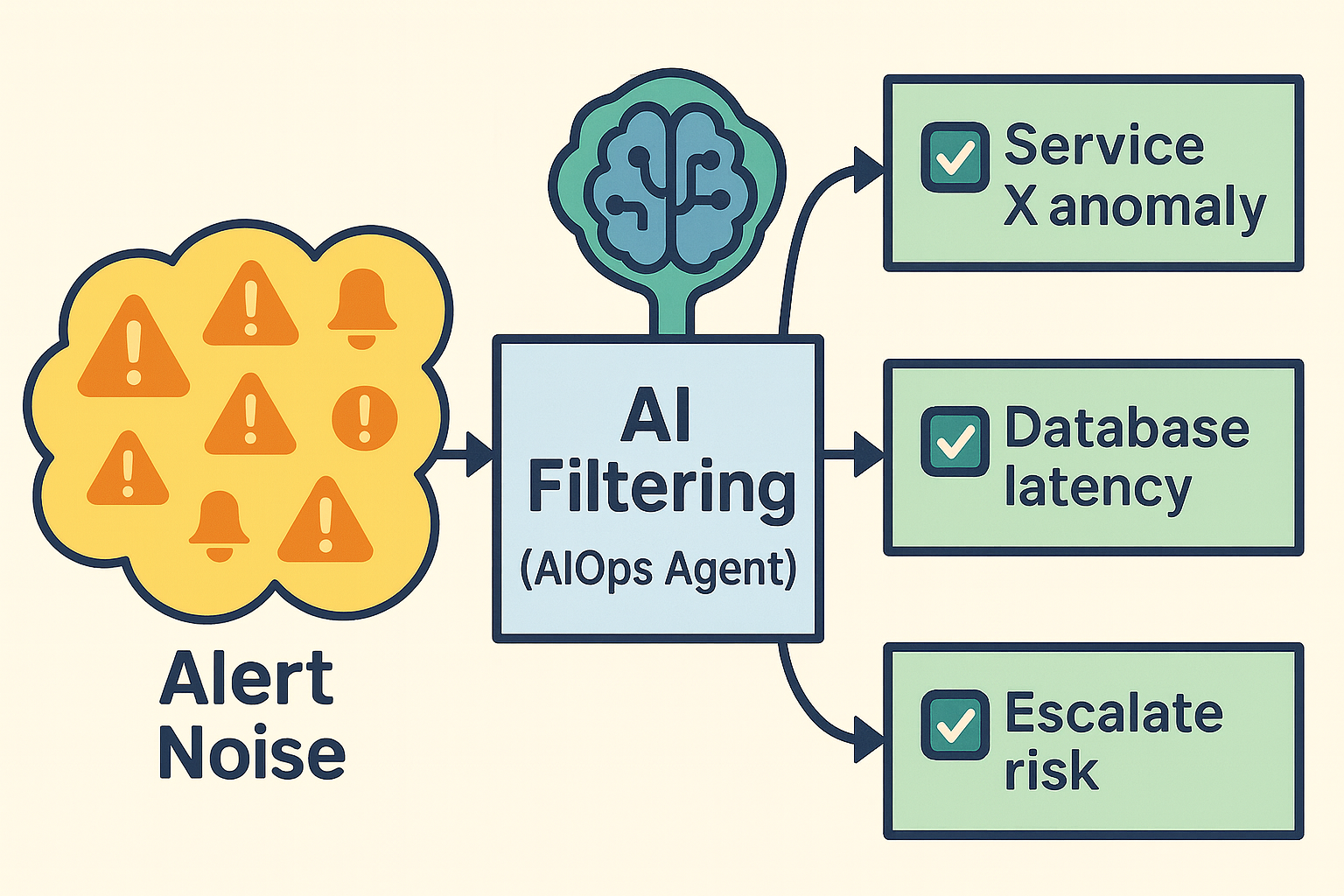

As systems grew more complex, that gap widened. Thousands of alerts, signals, and anomalies flooded in. No human could keep pace, even with bots standing ready to execute commands. What we needed wasn’t just faster fingers. We needed help deciding what to do next.

That’s where AIOps comes in: shifting from bots that wait for instructions to systems that surface insights, recommend actions, and stay within guardrails we define.

That shift is the real line between ChatOps and AIOps.

ChatOps vs. AIOps: Why the Lessons Still Matter

What seemed like a minor fix in a Slack channel turned out to be a blueprint. If you give people autonomy and wrap it in trust, they’ll ship faster and safer. That principle didn’t end with Shippy. It’s precisely what today’s AI-driven operations are rediscovering.

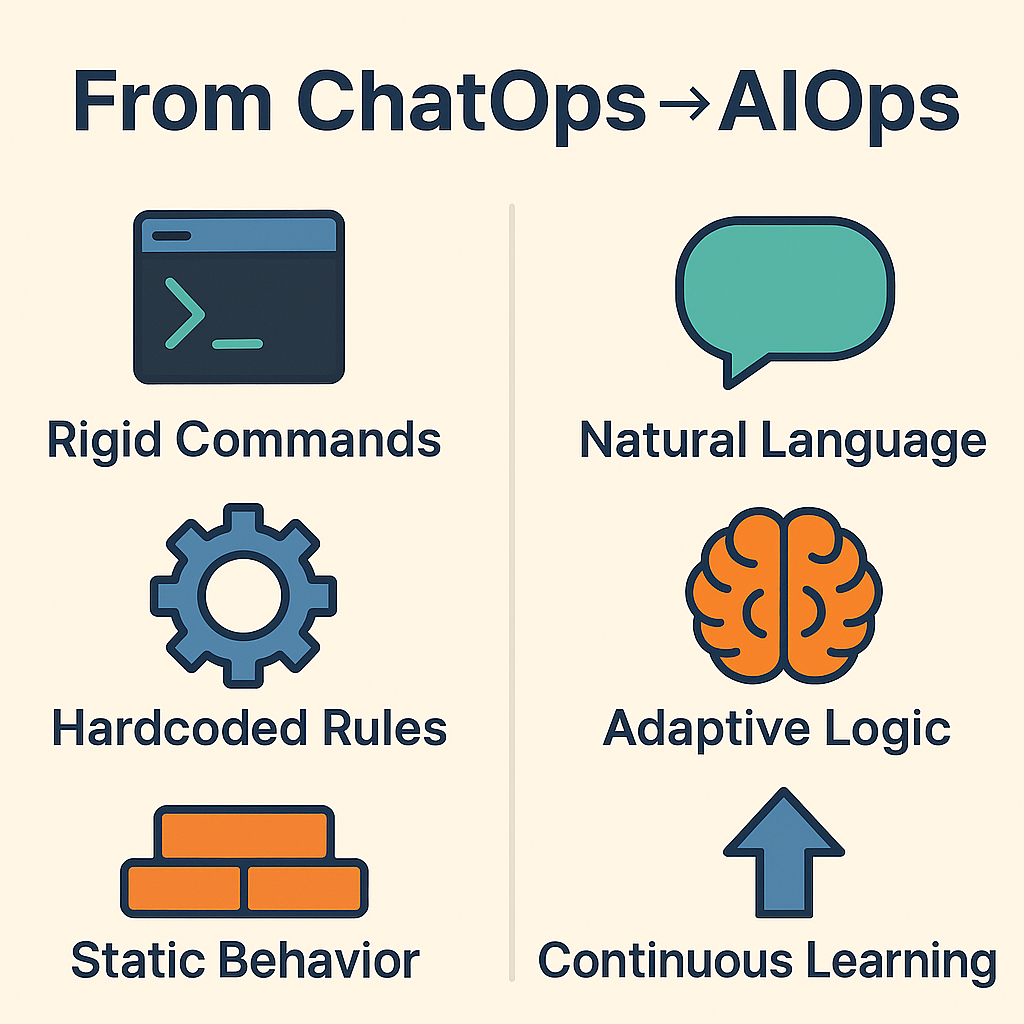

The parallels are unmistakable:

- Interpreting intent: Where our bot required rigid commands, AI can understand natural language: “Deploy service X to staging if tests pass.”

- Guardrails on decisions: Where we hardcoded rules, AI blends policies with adaptive logic: “Approve rollout if error budget isn’t at risk.”

- Continuous learning: Where our bot was static, AI can evolve, spotting anomalies, escalating risks, and even suggesting safer deployment strategies.

In other words, the technology shifts, but the principle doesn’t: push operations closer to the people doing the work, but design guardrails that make reliability visible at every step.
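The "approve rollout if error budget isn’t at risk" policy quoted above can be made concrete with a small calculation. The sketch below uses the common definition of an error budget (the failures an SLO tolerates over a window). The function names and the 25% safety margin are illustrative assumptions, not values from any particular platform.

```python
def error_budget_remaining(slo_target: float, total: int, failed: int) -> float:
    """Fraction of the error budget still unspent in the window.

    slo_target is the success-rate objective, e.g. 0.999.
    The result can go negative once the budget is overspent.
    """
    allowed_failures = (1.0 - slo_target) * total
    if allowed_failures == 0:
        # No traffic or a 100% SLO: any failure exhausts the budget.
        return 0.0 if failed else 1.0
    return 1.0 - failed / allowed_failures


def approve_rollout(slo_target: float, total: int, failed: int,
                    min_remaining: float = 0.25) -> bool:
    """Approve only while a safety margin of the budget is left (assumed 25%)."""
    return error_budget_remaining(slo_target, total, failed) >= min_remaining


# With a 99.9% SLO and 1,000,000 requests, about 1,000 failures are tolerated.
# 400 failures leaves roughly 60% of the budget, so the rollout is approved;
# 900 failures leaves roughly 10%, so it is held back.
print(approve_rollout(0.999, 1_000_000, 400))
print(approve_rollout(0.999, 1_000_000, 900))
```

The point of encoding the rule this way is that the threshold stays a visible, reviewable number rather than something buried in a model's judgment.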

ChatOps vs. AIOps: At a Glance

AIOps isn’t “hands off.” It’s “hands on the rail.”

It’s one thing to describe the difference in words. It’s even clearer when you see the shift side by side.

| | ChatOps | AIOps |
|---|---|---|
| How work happens | Commands typed by humans | Suggestions generated by AI, executed with approval |
| Logic | Static runbooks & scripts | Adaptive logic bounded by policy (guardrails) |
| Trust model | “I typed it” transparency | “Why this?” explanation + risk estimate |
| Change control | Manual | Propose → Review → (Approve/Reject) |
| Failure mode | Fat-fingered commands | Silent agents unless guardrails + logging |

The table doesn’t tell the whole story, but it shows the mindset shift at a glance.

Of course, a side-by-side chart only tells you how the models differ. The harder question is how to make AIOps trustworthy in practice.
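One way to picture the "Propose → Review → (Approve/Reject)" row from the table is as a tiny state machine in which nothing runs until a human has approved it. This is a minimal sketch under stated assumptions: `Proposal`, `Status`, and the `run` callback are hypothetical names, and a real system would persist the audit trail rather than keep it in memory.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXECUTED = "executed"


@dataclass
class Proposal:
    action: str      # what the agent wants to do
    rationale: str   # the "Why this?" explanation
    risk: str        # plain-language risk estimate, e.g. "low"
    status: Status = Status.PROPOSED
    audit: List[str] = field(default_factory=list)

    def review(self, reviewer: str, approve: bool) -> None:
        """Record a human decision; a proposal can only be reviewed once."""
        if self.status is not Status.PROPOSED:
            raise ValueError(f"cannot review a proposal that is {self.status.value}")
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.audit.append(f"{reviewer} {self.status.value} '{self.action}'")

    def execute(self, run: Callable[[str], None]) -> None:
        """Run the action only if a reviewer approved it."""
        if self.status is not Status.APPROVED:
            raise PermissionError("only approved proposals may run")
        run(self.action)
        self.status = Status.EXECUTED
        self.audit.append(f"executed '{self.action}'")
```

A caller would create a `Proposal`, have a person call `review(...)`, and only then call `execute(...)`; attempting to execute an unreviewed or rejected proposal raises instead of silently proceeding.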

Trust and Transparency: The Human Factor in Automation

Trust never arrives by default. Even when an automation saves time, people hesitate: Will it miss something? Will it behave reasonably and fairly? The reason Shippy worked wasn’t clever code; it was transparency. Every action a person took with Shippy, along with the bot’s response, was posted in Slack. Every decision was auditable, and rollback was always one line away. Shippy taught us that transparency wasn’t optional; it was the lever that shifted skepticism into adoption.

That same dynamic shows up with AI. Teams will only adopt agents that make their reasoning visible and leave humans in control. A model that silently mutates infrastructure is a nightmare. A model that suggests, explains, and logs its actions can earn authentic human trust.

Building Trust in AIOps

If ChatOps forced us to make automation visible, AIOps forces us to make it accountable. The same principles apply, but at a higher scale and higher stakes.

Think back to the guardrails we put around deployment scripts and ChatOps bots. Those don’t disappear. They extend. What once protected a single service now has to constrain decisions across thousands of signals.

Guardrails for AIOps

Guardrails aren’t optional: they’re the difference between a co-worker and a black box.

- Scope: Limit blast radius (which services, environments, or failure domains AI can touch)

- Policy: Actions must align with explicit allowlists and organizational RBAC rules

- Explain: Every suggestion carries a rationale and a risk level in plain language

- Review: Require a human checkpoint for high-impact or high-risk decisions

- Log: Capture an auditable trail of inputs, outputs, and decisions for future debugging
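The five guardrails above can be sketched as one evaluation step that every AI suggestion passes through before anything happens. All names here (`Suggestion`, `Guardrails`, the verdict strings) are hypothetical, and RBAC is reduced to a simple action allowlist for brevity; treat it as an illustration of the shape, not a reference implementation.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Set


@dataclass(frozen=True)
class Suggestion:
    action: str       # e.g. "restart"
    service: str
    environment: str
    rationale: str    # Explain: plain-language reason
    risk: str         # Explain: "low", "medium", or "high"


@dataclass
class Guardrails:
    allowed_services: Set[str]       # Scope
    allowed_environments: Set[str]   # Scope
    allowed_actions: Set[str]        # Policy (stand-in for allowlists + RBAC)
    review_risks: Set[str]           # Review: risk levels that need a human

    def evaluate(self, s: Suggestion, audit_log: List[str]) -> str:
        """Return a verdict and append an auditable record of the decision."""
        if (s.service not in self.allowed_services
                or s.environment not in self.allowed_environments):
            verdict = "deny: out of scope"
        elif s.action not in self.allowed_actions:
            verdict = "deny: action not allowlisted"
        elif not s.rationale.strip():
            verdict = "deny: missing rationale"
        elif s.risk in self.review_risks:
            verdict = "needs human review"
        else:
            verdict = "allow"
        # Log: inputs and outcome are captured together for later debugging.
        audit_log.append(json.dumps({"suggestion": asdict(s), "verdict": verdict},
                                    sort_keys=True))
        return verdict
```

Note that the log entry is written on every path, including denials, so the audit trail shows what the agent tried to do as well as what it was allowed to do.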

AIOps can surface patterns and propose actions faster than any human. But without these safeguards, speed turns into opacity. With them, it becomes leverage.

AIOps in Practice: Reducing Alert Fatigue and Improving Incident Response

Every SRE knows the feeling: dashboards lighting up, alerts stacking faster than you can read them, and a war room full of noise.

Today, AIOps platforms promise relief by clustering signals, highlighting what matters, and keeping the process transparent through guardrails.

- PagerDuty AIOps groups related alerts so teams aren’t drowning in noise. Instead of responding to 50 pages, engineers see one consolidated incident, just like Shippy turned a chaotic queue into a single trusted source.

- Dynatrace AIOps, powered by Davis AI, maps dependencies and pinpoints root causes across billions of signals in seconds. It’s the guardrail of clarity: you don’t just see an error, you see where it started.

- New Relic AIOps uses predictive AI to highlight risks before they escalate. The value isn’t automation alone. It’s foresight paired with human judgment.

- Datadog AIOps blends anomaly detection with generative summaries. Instead of raw telemetry, you get actionable context, which is the difference between noise and narrative.
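To make the idea of alert grouping concrete, here is a deliberately simple time-window heuristic. It is not how any of the platforms above work internally (their grouping logic is their own and far more sophisticated); `Alert`, `group_alerts`, and the five-minute window are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Alert:
    service: str
    timestamp: float   # seconds since some epoch
    message: str


def group_alerts(alerts: List[Alert],
                 window_seconds: float = 300.0) -> List[List[Alert]]:
    """Fold alerts into incidents.

    An alert joins the latest incident for its service if it arrives within
    window_seconds of that incident's most recent alert; otherwise it opens
    a new incident.
    """
    incidents: List[List[Alert]] = []
    latest: Dict[str, List[Alert]] = {}   # service -> its most recent incident
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = latest.get(alert.service)
        if current and alert.timestamp - current[-1].timestamp <= window_seconds:
            current.append(alert)
        else:
            current = [alert]
            incidents.append(current)
            latest[alert.service] = current
    return incidents
```

Even this naive version shows the trade-off the real tools manage: a wider window means fewer pages but a higher chance of merging unrelated problems into one incident.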

These tools are excellent, but they’re not perfect. They still rely on solid instrumentation and human review. But the pattern is consistent: automation succeeds when it reduces friction without removing visibility. What was true for a Slack bot in one department is still true for AI agents operating at global enterprise scale.

However, reducing noise and speeding up incidents are just the starting points. The bigger challenge is what happens next: how we govern AI-driven decisions so they stay aligned with our policies, our teams, and our risk tolerance.

The Future of AI in DevOps: Guardrails and Governance

The next decade of DevOps may hinge on one question: how do we re-negotiate trust between humans and intelligent systems?

I’ve seen firsthand how lightweight guardrails unlocked massive autonomy in traditional automation. With AI, those same principles won’t be enough. The guardrails ahead must be more adaptive, more transparent, and possibly even more human-centered than ever.

That raises sharper questions:

- Where should AI make decisions independently, and where must human judgment remain in the loop?

- How do we protect velocity without sacrificing governance?

- What new oversight will emerge when agents become part of the delivery pipeline?

Tomorrow’s guardrails may not just be about error budgets or rollbacks. They may need to include ethical constraints, explainability thresholds, and automated audits. The stakes are higher, and so is the need for systems that can justify their decisions in ways humans can trust.

We solved these challenges once on a small scale with ChatOps. Solving them again with AI will define the next era of DevOps. I believe trust will be the deciding factor.

If DevOps was about breaking down silos between development and operations teams, then the next wave is about breaking down silos between humans and intelligent systems, such as AI agents.

The future will not be defined by how much we automate but by how well we govern the intelligence we invite into our systems.

That’s where the real work begins.

Conclusion: Navigating the Next Wave

The future of DevOps will not be decided by the tools alone but by the guardrails and governance we put around them. AI can lighten the load, but trust is still earned through visibility, accountability, and human judgment where it matters most.

I’d love to hear your perspective:

- Where have AI-driven tools already reduced your ops burden?

- Where do you still draw the line for human oversight?

- What new guardrails will your team need as AI enters ops?

Sharing those stories will help us navigate this next wave of DevOps together.

FAQ: ChatOps, AIOps, and DevOps Automation

What is the difference between ChatOps and AIOps?

ChatOps connects people, tools, and processes through chat platforms like Slack or Teams. Think deployment bots or automated workflows triggered by commands. AIOps goes further by applying AI and machine learning to operations data, reducing alert fatigue, surfacing root causes, and suggesting remediation. Both aim to streamline DevOps automation, but AIOps adds intelligence on top of automation.

How do you build trust in automation within DevOps?

Trust comes from transparency and guardrails. In ChatOps, that meant logging every action in Slack and giving developers clear rollback options. In AIOps, it means explainable decisions, visible reasoning, and human override. Automation succeeds when teams can see why something happened and stay in control.

What problems does AIOps solve for DevOps teams?

The biggest win is reducing noise. Platforms like PagerDuty, Datadog, and Dynatrace group related alerts, highlight anomalies, and cut false positives. That helps engineers focus on incidents that matter, improving response time and reducing burnout. AIOps also accelerates root cause analysis, saving hours in complex environments.

Will AI replace humans in DevOps operations?

No. AI is best at handling repetitive, noisy, or high-volume tasks. Humans are still critical for judgment, context, and trade-offs. The future of AI in DevOps is “AI plus human”: agents handle routine signals, while engineers guide strategy, governance, and culture.